Security Program Best-Practices 5

July 25, 2013

Security Program Best-Practices – Part 5

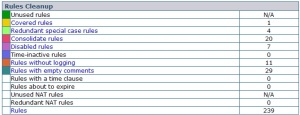

This blog continues our Security Governance Series with the next installment of recommended security program best practices drawn from a broad sample of assessments. In this post we discuss the final and most critical gap, Gap 10: Develop Firewall Rule Lifecycle Management.

Gap 10: Firewall Rule Lifecycle Management

Business Problem

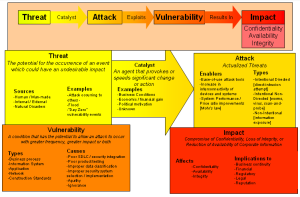

Firewalls are the first and continued line of defense for enterprises today, handling vast amounts of traffic across the network. On the perimeter alone, firewalls filter millions of packets daily. The organizational security policy implemented in these firewalls requires the definition of hundreds and often thousands of rules and objects. Objects may include groups of servers, user machines, sub-networks in the data center, and networks in company branch offices or DMZs. The firewall rules define which types of applications and which network services are allowed to traverse between networks and which should be blocked.

Firewalls are Organic

Since business needs are dynamic, firewall policies are constantly being changed and modified. This continuous flux causes the firewall configuration to grow dramatically over time. A large and consequently complex firewall configuration is hard to manage and may require lengthy research in order to add or change a rule. Moreover, the complexity of the configuration decreases the firewall’s performance and may lead to security breaches. For example, a rule may be created to allow a temporary service to work for a limited time; if the administrator fails to delete the rule after the task is finished, it introduces a real security risk.

Finding unused rules that have not matched any traffic, duplicate rules, and rules that are covered by other rules is a complex manual task for the firewall administrator. It may take days of investigating just to locate such rules in huge firewall configurations, while at the same time the firewall is continuing to change daily due to user requests.

Gartner noted in a recent research note that …

“Through 2018, more than 95% of firewall breaches will be caused by firewall misconfigurations, not firewall flaws.”

Organizations need to develop a Firewall Rule Lifecycle Management process to clean up their firewall policies, easing the network security administrator’s job while boosting firewall performance and eliminating security holes.

Organizations need to identify and address the following:

- Unused rules: Rules that have not matched any packet during a specified time. Cisco ACL hit counters, central syslog logging, or commercial tools can be used to analyze the firewall logs and compare the actual traffic to the rules in the policy. Unused rules are ideal candidates for removal. Often the application has been decommissioned or the server has been relocated to a different address.

- Covered or duplicated rules: Rules that can never match traffic because a prior rule or a combination of earlier rules prevents traffic from ever hitting them. During firewall cleanup such covered rules can be deleted since they will never be used. Covered and duplicated rules make the firewall spend processing time for no benefit and decrease its performance.

- Disabled rules: Rules that are marked “disabled” and are not in operation. Disabled rules are ideal candidates for removal, unless the administrator keeps them for occasional use or for historical record.

- Time-inactive rules: Rules that were scheduled to be active for a specified period that has since expired. A rule that was active for a specific period can become active again at the same time next year, so retaining such rules may create security holes.

- Rules without logging: Rules that are defined not to generate logs. Security best-practice guidelines usually call for logging everything. Since log information consumes a large amount of disk space, administrators often configure highly used rules that control low-risk traffic not to generate logs. Listing the rules without logs helps the administrator verify that the lack of an audit trail for these rules does not contradict policy.

- Least used rules and most used rules: Rules that matched the smallest or the largest number of packets over a predefined and configurable period of time. The rule usage statistics help the administrator in the cleanup process for performance improvement: the administrator may want to move the most used rules higher in the configuration and the least used rules lower. Rules with a zero hit count may be removed.

- Rules with empty comments: Rules that are not documented, i.e., that lack a text explanation or a reference number to the original change management request. Policy often requires an explanation for each rule, so defining a rule without a comment is a policy violation. Some companies require that the ticket number from the help desk trouble-ticketing application be entered in the rule comment.

- Unattached objects: Objects that are not attached to any rule, including unattached global objects.

- Empty objects: Objects that do not contain any IP address or address range.

- Duplicate objects: Objects that already exist but are recreated, contributing to policy “bloat”.

- Unused objects: Objects whose address ranges did not match any packet during a specified time, including unused global objects.

By removing the unnecessary rules and objects, the complexity of the firewall policy is reduced. This simplifies management, improves performance, and removes potential security holes.
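Several of the checks above are simple set operations once the policy has been exported. The following is a minimal sketch, not a vendor tool: the `objects` and `rules` structures, their field names, and the sample data are all hypothetical, and a real export would need to handle nested groups, services, and vendor-specific attributes.

```python
from collections import defaultdict

# Hypothetical policy export: object name -> set of CIDR strings.
objects = {
    "web_servers": {"10.0.1.0/24"},
    "web_farm": {"10.0.1.0/24"},   # same definition under another name
    "old_lab": set(),              # contains no addresses
    "branch_nyc": {"192.168.5.0/24"},
    "any": {"0.0.0.0/0"},
}

# Hypothetical rule records; field names are assumptions for illustration.
rules = [
    {"id": "r1", "src": "any", "dst": "web_servers",
     "enabled": True, "log": True, "comment": "CHG-1001"},
    {"id": "r2", "src": "any", "dst": "web_farm",
     "enabled": False, "log": True, "comment": ""},
    {"id": "r3", "src": "branch_nyc", "dst": "web_servers",
     "enabled": True, "log": False, "comment": "CHG-1002"},
]


def audit(objects, rules):
    """Return cleanup candidates found by static inspection only."""
    # Every object name referenced as a source or destination by some rule.
    used = {r["src"] for r in rules} | {r["dst"] for r in rules}

    # Group non-empty objects by their address content to spot duplicates.
    by_definition = defaultdict(list)
    for name, addrs in objects.items():
        if addrs:
            by_definition[frozenset(addrs)].append(name)

    return {
        "unattached_objects": sorted(set(objects) - used),
        "empty_objects": sorted(n for n, a in objects.items() if not a),
        "duplicate_objects": sorted(
            sorted(names) for names in by_definition.values() if len(names) > 1
        ),
        "disabled_rules": [r["id"] for r in rules if not r["enabled"]],
        "rules_without_logging": [r["id"] for r in rules if not r["log"]],
        "rules_without_comment": [r["id"] for r in rules if not r["comment"].strip()],
    }


report = audit(objects, rules)
for finding, items in report.items():
    print(finding, items)
```

Unused rules and unused objects are deliberately left out of this sketch because they depend on traffic data rather than on the configuration alone.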

Cleanup Phase 1: Validation

The Validation phase involves reviewing the firewall rules and performing a static analysis, either manually or with public-domain or commercial tools such as AlgoSec or Tufin.

The items to be reviewed in this step are:

- Unattached objects and unattached VPN user groups: objects that do not appear in any rule, and whose parent groups do not appear in any rule, in any policy on any firewall.

- Empty objects: do not refer to any IP address.

- Unattached VPN users: do not appear in any user group and have no access.

- Unattached access lists (Cisco): not connected to any interface.

- Expired VPN users: no longer have access.

- Disabled rules: consider whether it is time to delete them.

- Time-inactive rules:

  - Timed rules are active on certain days of the month, days of the week, or times of the day, but you cannot set a year.

  - Identify the expired rules before they become active again next year.

- Duplicate rules:

  - Firewalls process the rules in order, on a “first match” basis.

  - If “early” rules match every packet that a “late” rule could match, the “late” rule is covered (useless clutter).

  - Easy cases: a single rule covers another rule; the object names match exactly.

- Duplicate objects:

  - Most firewall vendor consoles cannot answer the question “does this definition already exist with another name?”

  - Result: administrators often define the same object (host, subnet, or group) multiple times.
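The “easy case” of covered rules, where one earlier rule fully contains a later one, can be sketched with Python’s standard `ipaddress` module. The `Rule` model below is an assumption made for illustration: it compares source network, destination network, and a destination port range only, and ignores protocol, interfaces, and object groups. It does not detect a rule that is covered only by a combination of earlier rules.

```python
from dataclasses import dataclass
from ipaddress import ip_network


@dataclass(frozen=True)
class Rule:
    """Simplified, hypothetical rule: CIDR networks plus a port range."""
    name: str
    src: str
    dst: str
    port_lo: int
    port_hi: int
    action: str


def covers(early, late):
    """True if every packet `late` could match is also matched by `early`."""
    return (
        ip_network(late.src).subnet_of(ip_network(early.src))
        and ip_network(late.dst).subnet_of(ip_network(early.dst))
        and early.port_lo <= late.port_lo
        and late.port_hi <= early.port_hi
    )


def find_covered(rules):
    """Return (covered rule, covering rule) name pairs under first-match order."""
    findings = []
    for j, late in enumerate(rules):
        for early in rules[:j]:
            if covers(early, late):
                findings.append((late.name, early.name))
                break  # one covering rule is enough to flag it
    return findings


policy = [
    Rule("allow-dmz-web", "0.0.0.0/0", "10.0.1.0/24", 80, 443, "allow"),
    Rule("allow-one-web", "192.168.5.0/24", "10.0.1.10/32", 443, 443, "allow"),
    Rule("allow-dns", "0.0.0.0/0", "10.0.2.53/32", 53, 53, "allow"),
]

covered = find_covered(policy)
print(covered)
```

When the covering rule and the covered rule have different actions, the later rule is still dead, but it usually deserves a closer look because it may reveal an intent that the policy no longer enforces.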

Cleanup Phase 2: Unused Rules

The Unused Rules phase involves usage-based analysis: focusing on what has changed recently, keeping the firewall rules up to date, and flagging or removing rules that are no longer needed, so that the policy does not become unwieldy or accumulate conflicts and duplicates.

This step allows us to identify the following key data:

- Unused rules: have not matched traffic in the last NNN days.

- Unused objects: do not belong to any rule that matched traffic in the last NNN days.

- Most and least used rules.

- Last date that each rule was used, even if it is listed as “unused” due to logging configuration settings.
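As a rough illustration of the “not used in the last NNN days” test, the sketch below works from already-parsed log records. The record layout, the rule identifiers, and the 90-day window are all assumptions; real firewall logs need vendor-specific parsing first. Rules that do not produce logs are reported separately, since an absence of log entries proves nothing about them.

```python
from datetime import date, timedelta

# Hypothetical, already-parsed log records: (date of match, rule identifier).
log_records = [
    (date(2013, 7, 20), "r1"),
    (date(2013, 1, 15), "r2"),
    (date(2013, 7, 1), "r1"),
]
all_rule_ids = ["r1", "r2", "r3", "r4"]
unlogged_rule_ids = {"r3"}  # rules configured not to produce logs


def last_used(records):
    """Map each rule identifier to the most recent date it matched traffic."""
    latest = {}
    for day, rule_id in records:
        if rule_id not in latest or day > latest[rule_id]:
            latest[rule_id] = day
    return latest


def classify(all_ids, unlogged, latest, today, max_age_days):
    """Split rules into 'unused' and 'unknown because unlogged'."""
    cutoff = today - timedelta(days=max_age_days)
    unused, unknown = [], []
    for rule_id in all_ids:
        if rule_id in unlogged:
            unknown.append(rule_id)
        elif latest.get(rule_id, date.min) < cutoff:
            unused.append(rule_id)
    return unused, unknown


latest = last_used(log_records)
unused, unknown = classify(
    all_rule_ids, unlogged_rule_ids, latest, today=date(2013, 7, 25), max_age_days=90
)
print("unused:", unused)
print("cannot tell (no logging):", unknown)
```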

Bear the following considerations in mind for this step:

- Over time:

  - Applications are discontinued.

  - Servers are relocated to other IP addresses.

  - Test environments move to production.

  - Business partnerships change.

  - Networks are re-architected.

  - Routing is changed.

- Result: firewalls still have the rules, but the traffic is gone.

- Idea: track and flag rules and objects that have not been used “recently”.

- Firewalls can log each matched packet:

  - The log includes the rule number, timestamp, and more.

- Basic approach:

  - 1) Filter the logs based on rule number.

  - 2) Find the missing rule numbers and delete those rules.

- Challenge #1: logging is configured per rule.

  - Some rules are not configured to produce logs.

  - Solution #1: list rules that do not produce logs separately.

- Challenge #2: rule insertions and deletions change the rule numbers!

  - Which rule corresponds to what used to be called rule 101 in Nov ’07?

  - This makes long-term statistics unreliable.

  - Solution #2: the vendor attaches a unique “rule_id” to each rule, which is reported to the log and remains with the rule through any rule add, remove, or modify.

- Cisco firewalls and routers maintain a per-rule hit counter.

  - Advantages:

    - Unrelated to logging: un-logged rules are counted too.

    - Rule insertions and deletions do not affect the hit counters.

  - Challenge: hit counters are reset to zero when the device reboots.

  - Solution:

    - Take periodic snapshots.

    - Attach pseudo rule_uids and homogenize the snapshots.

    - Make sure not to double-count.

- Some rules only work occasionally or rarely:

  - High shopping season.

  - Disaster recovery rules, tested semi-annually.

  - Usage information covering many months is needed.

  - Challenge:

    - Log files can become huge, and querying extended historical data can have a real impact on the production log server.

    - Logs are discarded or rotated.

    - Hit counters are occasionally reset to 0.

  - Solution:

    - Process the raw usage information frequently (daily).

    - Keep concise summaries available (forever).
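The snapshot approach for hit counters can be sketched as follows. This is one possible way to avoid double-counting, under stated assumptions: each snapshot is a hypothetical mapping from a stable rule identifier (the pseudo rule_uid mentioned above) to the cumulative counter read from the device, and a counter that is lower than its previous reading is treated as having been reset. A reset followed by enough traffic to exceed the previous reading before the next snapshot cannot be detected this way, which is one reason to take snapshots frequently.

```python
def accumulate_hits(snapshots):
    """Sum per-rule hits across chronological cumulative-counter snapshots.

    Only the increase since the previous snapshot is added, so a hit is
    never counted twice. A drop in the counter is treated as a reset, in
    which case the whole new reading is counted as fresh traffic.
    """
    totals = {}
    previous = {}
    for snapshot in snapshots:
        for uid, count in snapshot.items():
            prev = previous.get(uid, 0)
            delta = count - prev if count >= prev else count
            totals[uid] = totals.get(uid, 0) + delta
            previous[uid] = count
    return totals


# Hypothetical daily snapshots; rule "a" is reset between the 2nd and 3rd.
snapshots = [
    {"a": 100, "b": 0, "c": 0},
    {"a": 150, "b": 0, "c": 0},
    {"a": 20, "b": 0, "c": 0},   # device rebooted, counters restarted
    {"a": 35, "b": 3, "c": 0},
]

totals = accumulate_hits(snapshots)
never_hit = sorted(uid for uid, hits in totals.items() if hits == 0)
print(totals)
print("zero-hit rules:", never_hit)
```

The `totals` dictionary is the kind of concise summary that can be kept long after the raw logs or counters are gone, so that seasonal and disaster recovery rules are judged on many months of data.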

Cleanup Phase 3: Performance Optimization

In order to provide a measurable attribute for firewall performance that will show the improvement of the policy optimization, there is a metric called Rules Matched Per Packet (RMPP).

RMPP is simply a calculation of the average number of rules the firewall tested until it reached the rule that matched a packet (including the matched rule). For example:

If the firewall policy consists of only one rule (allow or deny all) that matches everything – RMPP will be 1. If the firewall policy consists of 100 rules, such that rule #1 matches 20% of the packets, rule #10 matches 30% and rule #100 matches 50% of the packets:

RMPP = 1 * 20% + 10 * 30% + 100 * 50% = 0.2 + 3 + 50 = 53.2

Firewalls do in fact test the rules in sequence, one after another, until they reach the matching rule, and each tested rule contributes to the firewall’s CPU utilization. Therefore, optimizing the policy to decrease the RMPP score will decrease the firewall CPU utilization and greatly improve overall performance.

Building on the previous example, if rule #100 (that matches 50% of the packets) can be relocated to position #50 – without modifying the firewall policy decisions – the RMPP will be reduced significantly:

RMPP = 1 * 20% + 10 * 30% + 50 * 50% = 0.2 + 3 + 25 = 28.2

This simple change, which can be achieved by reordering the rules, reduces RMPP by about 47% (from 53.2 to 28.2), with a corresponding reduction in the rule-matching work the firewall performs per packet.
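The RMPP arithmetic above is easy to reproduce. The helper below is a minimal sketch: the function name `rmpp` and the input format (a mapping from 1-based rule position to the fraction of packets matched at that position) are assumptions chosen for this example.

```python
def rmpp(match_share):
    """Rules Matched Per Packet: average number of rules tested per packet.

    `match_share` maps a rule's 1-based position to the fraction of packets
    that match at that position; the fractions must sum to 1.
    """
    total = sum(match_share.values())
    if abs(total - 1.0) > 1e-9:
        raise ValueError(f"match fractions sum to {total}, expected 1.0")
    return sum(position * share for position, share in match_share.items())


# The example policy: rules #1, #10 and #100 match 20%, 30% and 50% of packets.
before = rmpp({1: 0.20, 10: 0.30, 100: 0.50})

# The same policy after relocating rule #100 to position #50.
after = rmpp({1: 0.20, 10: 0.30, 50: 0.50})

reduction = (before - after) / before
print(f"RMPP before: {before:.1f}")
print(f"RMPP after:  {after:.1f}")
print(f"Reduction:   {reduction:.0%}")
```

Feeding the function real per-rule hit percentages makes it possible to compare candidate rule orders before touching the firewall, provided the reordering does not change the policy decisions.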

Conclusion

Firewall administrators can achieve significant and measurable performance improvements for their complex firewalls by using these cleanup, lifecycle management, and policy optimization (with rule reordering) techniques. Many commercial tools are available to help with policy cleanup by identifying rules that are unused, covered, or disabled and should ideally be removed, as well as unattached, empty, duplicate, and unused objects. By alerting administrators, the tools help to eliminate security risks and keep the firewall policy well managed.

The more established firewall audit vendors include Tufin Software Technologies, AlgoSec, Secure Passage, and Athena Security. RedSeal Systems and Skybox Security are primarily risk-mitigation tools, and so go beyond firewall audit to feature risk-assessment and risk-management capabilities.

Thanks for your interest!

Nige the Security Guy.