APT Threat Analytics – Part 2

March 14, 2014

In today’s threat environment, rapid communication of pertinent threat information is vital to quickly detecting, responding to, and containing targeted attacks. The key to improving an organization’s reaction time is a workflow and set of tools that allow threat information to be analyzed and actionable results to be communicated across the organization rapidly.

In this APT Threat Analytics – Part 2 blog, we will discuss the options for threat intelligence collaboration and sharing together with a current snapshot of the available tools and standards/developments to help determine whether your organization can benefit from an emerging dedicated in-house threat intelligence program.

Given the escalating threat landscape, improving threat detection is key for most organizations, specifically developing an intelligence-driven approach. This requires collecting and consolidating threat intelligence data from internal sources (e.g. systems and applications) and external sources (e.g. government or commercial threat feeds) and using analytics to spot attack indicators or anomalous behavior patterns.

The core team should have the capabilities to achieve good visibility and situational awareness across the organization.

- People: Internal and external networking for developing good sources of intelligence, communication for developing reports and presenting intelligence briefings

- Process: Designing an end-to-end intelligence process including obtaining and filtering data, performing analysis, communicating actionable results, making a risk decision, and taking action

- Technology: Analysis (drawing connections between seemingly disconnected data) and data analytics techniques

What is Threat Intelligence?

Threat intelligence means different things to different organizations, so it’s important to first define what threat intelligence means to your organization. The ultimate goals of threat intelligence gathering and sharing should be as follows:

- Develop actionable intelligence

- Better intelligence translates to better protection

- Increased protection translates to less fraud and reduced revenue loss

- Collective intelligence is far more effective than individual silos

- Both external and internal sources are needed to address targeted threats

In-House Threat Intelligence

With the increase in advanced, multidimensional threats, organizations can no longer depend solely on existing automated perimeter gateway tools to weed out malicious activity. More and more organizations are considering development of an in-house threat intelligence program, dedicating staff and other resources to network baselines, anomaly detection, deep inspection, and correlation of network and application data and activity.

With the advanced, blended, multidimensional, targeted cyber attacks being launched today, your organization still needs an experienced set of human eyes analyzing the data it collects in order to protect its critical assets, not to mention its reputation.

Performing in-house threat intelligence need not be complex or expensive. Such a program can be as simple as IT staff being trained to pay closer attention to data. In other cases, threat intelligence might mean having a team of people performing deep content inspection and forensics on a full-time basis. Where an organization falls in that range depends on various factors such as critical assets/data, risk tolerance, core competency, and so on. The organization may elect a DIY in-house model or a co-sourced partner.

One of the biggest benefits of taking control of internal threat intelligence is that it forces organizations to do the following:

- Develop a deep understanding of how systems are used and how data is accessed

- Recognize traffic and usage patterns

- Pay attention to log data and correlate that data with a known baseline of how users interact with data, applications, and servers

- Consolidate and manage log sources

With all of this data at an analyst’s fingertips, organizations can recognize the subtle anomalies that may indicate an attack, which is the main goal of your threat intelligence effort. For more information see the forthcoming blog: APT Anomaly Detection as well as the new NG Security Operations (SoC V2) series.

What would constitute a subtle anomaly? One example is inappropriate remote access to critical servers in your environment. Many organizations don’t bother to actively audit remote desktop access to critical servers, but what if out of the blue you detect repeated Remote Desktop Protocol (RDP) sessions and failed logins to a domain controller from a new system in your environment? Your gateway tools won’t help here, and this activity would certainly warrant investigation because it could indicate the beginning (or middle) of an advanced persistent threat (APT).
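The RDP scenario above could be checked with a short script along the following lines. This is a minimal sketch, assuming authentication events have already been parsed into simple records. The field names (`src`, `dst`, `protocol`, `success`), the baseline set of known sources, and the failure threshold are all hypothetical and not taken from any particular product.

```python
from collections import Counter

# Hypothetical, already-parsed authentication events (not a real log format).
events = [
    {"src": "10.0.1.15", "dst": "dc01", "protocol": "rdp", "success": True},
    {"src": "10.0.5.77", "dst": "dc01", "protocol": "rdp", "success": False},
    {"src": "10.0.5.77", "dst": "dc01", "protocol": "rdp", "success": False},
    {"src": "10.0.5.77", "dst": "dc01", "protocol": "rdp", "success": False},
    {"src": "10.0.5.77", "dst": "files02", "protocol": "smb", "success": True},
]


def flag_new_rdp_sources(events, critical_servers, baseline_sources, min_failures=3):
    """Return (source, server) pairs where a source outside the baseline
    has repeated failed RDP logins against a critical server."""
    failures = Counter()
    for event in events:
        if event["protocol"] != "rdp" or event["dst"] not in critical_servers:
            continue  # only RDP sessions to critical servers are of interest
        if event["src"] in baseline_sources:
            continue  # this system is already known to administer the server
        if not event["success"]:
            failures[(event["src"], event["dst"])] += 1
    return sorted(pair for pair, count in failures.items() if count >= min_failures)


suspicious = flag_new_rdp_sources(
    events,
    critical_servers={"dc01"},
    baseline_sources={"10.0.1.15"},
)
print(suspicious)
```

The baseline is what makes this useful: the same three failed logins from a known administrator workstation would be ignored, while a new system stands out immediately.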

Picking the Right Tools

There are a few major hurdles when it comes to performing comprehensive cyber threat intelligence (CTI) in-house. Organizations need a core set of security tools to provide the essential foundational elements for performing threat intelligence. They should certainly consider leveraging external sources and service providers to fill in gaps in their defenses.

Some of the frameworks, tools, standards, and working groups to consider are as follows:

- OpenIOC – Open Indicators of Compromise framework

- VERIS – Vocabulary for Event Recording and Incident Sharing

- CybOX – Cyber Observable eXpression

- IODEF – Incident Object Description and Exchange Format

- TAXII – Trusted Automated eXchange of Indicator Information

- STIX – Structured Threat Information eXpression

- MILE – Managed Incident Lightweight Exchange

- TLP – Traffic Light Protocol

- OTX – Open Threat Exchange

- CIF – Collective Intelligence Framework

IODEF

Incident Object Description and Exchange Format (IODEF) is a standard defined by Request For Comments (RFC) 5070. IODEF is an XML-based standard used by Computer Security Incident Response Teams (CSIRTs) to share incident information. The IODEF Data Model includes over 30 classes and subclasses used to define incident data.

IODEF provides a data model to accommodate the most commonly exchanged data elements and associated context for indicators and incidents.

- IODEF-Extensions For Structured Cybersecurity Information

- Extension Classes: Attack Pattern, Platform, Vulnerability Scoring, Weakness, Event Report, Verification, Remediation

- Standards: CAPEC, CEE, CPE, CVE, CVRF, CVSS, CWE, CWSS, OCIL, OVAL, XCCDF

OpenIOC

OpenIOC was introduced by Mandiant. It is used in Mandiant products and tools such as Redline, but has also been released as an open standard. OpenIOC is an XML-based standardized format for sharing threat indicators, and it provides definitions for specific technical details, including over 500 indicator terms.

- Derived from years of “What Works” for Mandiant

- Indicator Terms

- Artifacts on Hosts and Networks

- Logical Comparisons

- Groupings, Conditions

- Ability to Store & Communicate Context

- Continues to be developed and improved
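The combination of indicator terms, logical comparisons, and groupings listed above can be pictured as a small tree of AND/OR nodes evaluated against artifacts collected from a host. The sketch below is only an illustration of that idea: the dictionary layout, the term names (`file_md5`, `file_name`, `service_name`), and the sample values are hypothetical and are not the OpenIOC XML schema or its official term list.

```python
def matches(node, observed):
    """Recursively evaluate an AND/OR indicator tree against observed artifacts."""
    if "operator" in node:
        results = (matches(child, observed) for child in node["children"])
        return all(results) if node["operator"] == "AND" else any(results)
    value = observed.get(node["term"], "")
    if node["condition"] == "is":
        return value == node["content"]
    if node["condition"] == "contains":
        return node["content"] in value
    raise ValueError("unsupported condition: " + node["condition"])


# Hypothetical indicator: a known hash, OR a suspicious file name together
# with a specific service name.
indicator = {
    "operator": "OR",
    "children": [
        {"term": "file_md5", "condition": "is",
         "content": "d41d8cd98f00b204e9800998ecf8427e"},
        {"operator": "AND", "children": [
            {"term": "file_name", "condition": "contains", "content": "svch0st"},
            {"term": "service_name", "condition": "is", "content": "updsvc"},
        ]},
    ],
}

host_a = {"file_name": "C:\\Windows\\Temp\\svch0st.exe", "service_name": "updsvc"}
host_b = {"file_name": "C:\\Windows\\System32\\svchost.exe", "service_name": "updsvc"}

print(matches(indicator, host_a), matches(indicator, host_b))
```

Grouping conditions this way lets one indicator capture several alternative pieces of evidence, so a host can match even when the attacker has changed one artifact such as the file hash.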

OpenIOC Process Flow

VERIS

The Vocabulary for Event Recording and Incident Sharing (VERIS) framework was released by Verizon in March of 2010. As the name implies, VERIS provides a standard way of defining and sharing incident information. VERIS is an open and free set of metrics designed to provide a common language for describing security incidents (or threats) in a structured and repeatable manner.

- DBIR participants use the VERIS framework to collect and share data

- Enables case data to be shared anonymously to RISK Team for analysis

Vocabulary for Event Recording and Incident Sharing (VERIS) Overview

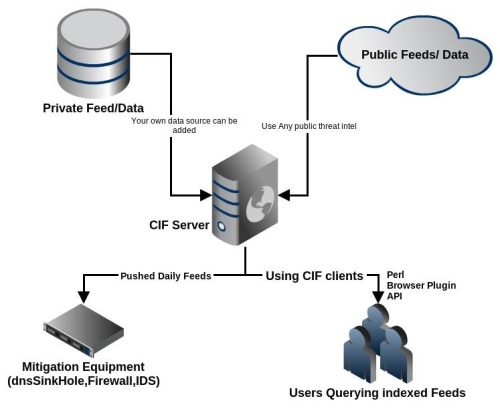

CIF

The Collective Intelligence Framework (CIF) is a client/server system for sharing threat intelligence data. CIF was developed out of the Research and Education Network Information Sharing and Analysis Center (REN-ISAC). CIF includes a server component which collects and stores threat intelligence data. Data can be IP addresses, autonomous system numbers (ASNs), email addresses, domain names, uniform resource locators (URLs), and other attributes. The data can be accessed via various client programs.

- CIF is a cyber threat intelligence management system

- Can combine known malicious threat information from many sources

- Use information for action: identification (incident response), detection (IDS) and mitigation
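Combining threat information from many sources usually starts with sorting raw values into the data types mentioned above. The following is a minimal sketch of that step using only the Python standard library. It is not CIF code or the CIF API; the `classify` and `merge` helpers, the regular expressions, and the feed names are assumptions made for illustration.

```python
import ipaddress
import re
from urllib.parse import urlparse

_DOMAIN = re.compile(r"^(?=.{1,253}$)([a-z0-9-]{1,63}\.)+[a-z]{2,63}$", re.I)
_EMAIL = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")
_ASN = re.compile(r"^AS\d+$", re.I)


def classify(value):
    """Guess the indicator type of a raw string (deliberately simplistic)."""
    value = value.strip()
    try:
        ipaddress.ip_address(value)
        return "ip"
    except ValueError:
        pass
    if _ASN.match(value):
        return "asn"
    if _EMAIL.match(value):
        return "email"
    parsed = urlparse(value)
    if parsed.scheme in ("http", "https", "ftp") and parsed.netloc:
        return "url"
    if _DOMAIN.match(value):
        return "domain"
    return "unknown"


def merge(feeds):
    """Combine several feeds into {(type, value): set of feed names}."""
    combined = {}
    for feed_name, values in feeds.items():
        for value in values:
            key = (classify(value), value.strip().lower())
            combined.setdefault(key, set()).add(feed_name)
    return combined


# Hypothetical feeds using documentation-reserved addresses and domains.
feeds = {
    "feed_a": ["192.0.2.10", "bad.example.net", "AS64496"],
    "feed_b": ["192.0.2.10", "http://example.com/a.exe", "phish@example.com"],
}
combined = merge(feeds)
print(sorted(combined[("ip", "192.0.2.10")]))
```

An indicator reported by more than one source, such as the IP address here, is often a better candidate for detection or blocking than one seen in a single feed.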

CybOX

CybOX is used for defining details regarding measurable events and stateful properties. The objects that can be defined in CybOX can be used in higher level schemas like STIX. The goal of CybOX is to enable automated sharing of security information such as CTI. It does this by providing over 70 defined objects that can be used to describe measurable events or stateful properties. Examples of objects are File, HTTP Session, Mutex, Network Connection, Network Flow and X509 Certificate.

STIX

Mitre developed Structured Threat Information eXpression (STIX) to define threat information, including threat details as well as the context of the threat. STIX is designed to support four cyber threat use cases:

- Analyzing cyber threats

- Specifying indicator patterns

- Managing response activities

- Sharing threat information

It uses XML to define threat related constructs such as campaign, exploit target, incident, indicator, threat actor and TTP.

Structured Threat Information Expression (STIX) Architecture

STIX provides a common mechanism for addressing structured cyber threat information across a wide range of use cases:

- Analyzing Cyber Threats

- Specifying Indicator Patterns for Cyber Threats

- Managing Cyber Threat Response Activities: Cyber Threat Prevention, Cyber Threat Detection, and Incident Response

- Sharing Cyber Threat Information

TAXII

Mitre developed Trusted Automated eXchange of Indicator Information (TAXII) to support sharing of threat intelligence data. The Mitre definition for TAXII states, “Defines a set of services and message exchanges for exchanging cyber threat information.” The TAXII sharing models (hub and spoke, peer to peer, and source/subscriber) allow for push or pull transfer of CTI data. The models are supported by four core services:

- Discovery

- Feed management

- Inbox

- Poll
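The difference between push and pull transfer, and the role of the four services listed above, can be pictured with a toy in-memory model. This is only a sketch of the concepts: the `ToyExchange` class, its method names, and the feed name are hypothetical, and it implements neither the TAXII message formats nor any TAXII library.

```python
from dataclasses import dataclass, field


@dataclass
class ToyExchange:
    """Toy in-memory stand-in for a sharing hub; not the TAXII wire protocol."""
    feeds: dict = field(default_factory=dict)  # feed name -> list of (sequence, record)
    sequence: int = 0

    def discovery(self):
        """Tell a client which services this hub offers."""
        return ["discovery", "feed_management", "inbox", "poll"]

    def feed_management(self):
        """List the feeds a client could subscribe to."""
        return sorted(self.feeds)

    def inbox(self, feed, record):
        """Push: a producer delivers a record to the hub."""
        self.sequence += 1
        self.feeds.setdefault(feed, []).append((self.sequence, record))
        return self.sequence

    def poll(self, feed, after=0):
        """Pull: a consumer asks for records newer than the last one it saw."""
        return [(seq, rec) for seq, rec in self.feeds.get(feed, []) if seq > after]


hub = ToyExchange()
hub.inbox("c2-addresses", "198.51.100.7")
last_seen = hub.inbox("c2-addresses", "203.0.113.44")
hub.inbox("c2-addresses", "192.0.2.99")

new_records = hub.poll("c2-addresses", after=last_seen)
print(hub.feed_management(), new_records)
```

In the push case the producer decides when data moves; in the pull case the consumer does, which is why the poll call carries a marker for what the consumer has already received.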

OTX

Open Threat Exchange (OTX) is a publicly available service created by AlienVault for sharing threat data. OTX is a centralized system for collecting threat intelligence: it cleanses, aggregates, validates, and publishes threat data streaming in from a broad range of security devices across a community of more than 18,000 OSSIM and AlienVault deployments. It interoperates with AlienVault's Open Source SIEM (OSSIM), where SIEM stands for Security Information and Event Management. OSSIM is free to use, and OSSIM users can configure their systems to upload their threat data to OTX.

MILE

The Managed Incident Lightweight Exchange (MILE) Working Group is working on standards for exchanging incident data. The group works on the data format used to define indicators and incidents, as well as on standards for exchanging that data. It has defined a package of standards for threat intelligence which includes Incident Object Description and Exchange Format (IODEF), IODEF for Structured Cyber Security Information (IODEF-SCI) and Real-time Inter-network Defense (RID).

Structured Cyber Security Standards

TLP

The Traffic Light Protocol (TLP) is a simple, straightforward protocol used to control what can be done with shared information. Shared information is tagged with one of four colors: white, green, amber, or red. The color designates what can be done with the shared information. Information tagged white can be distributed without restriction. Information tagged green can be shared within the sector or community, but not publicly. Information tagged amber may only be shared with members of the recipient's own organization. Information tagged red may not be shared. Given its simplicity, TLP can be used verbally, in email, or incorporated into an overall system.
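Because the rules are so simple, they are easy to build into a tool that decides whether a tagged item may be forwarded. The sketch below encodes the four colors as described above. The audience names (`organization`, `community`, `public`) and the `may_share` helper are hypothetical labels chosen for this example.

```python
from enum import Enum


class TLP(Enum):
    WHITE = "white"
    GREEN = "green"
    AMBER = "amber"
    RED = "red"


# How far each audience reaches (hypothetical labels for this example).
REACH = {"organization": 1, "community": 2, "public": 3}

# The widest reach each color permits, following the rules described above.
LIMIT = {TLP.RED: 0, TLP.AMBER: 1, TLP.GREEN: 2, TLP.WHITE: 3}


def may_share(tag, audience):
    """Return True if information with this TLP tag may go to the audience."""
    return REACH[audience] <= LIMIT[tag]


print(may_share(TLP.GREEN, "community"), may_share(TLP.GREEN, "public"))
```

A check like this can sit in front of any export step, for example before indicators are published to an external sharing community.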

Conclusion – KISS

The simplest way to mine threat intelligence is to leverage the information already on your systems and networks. Many organizations don’t fully mine logs from their perimeter devices and public-facing web servers for threat intelligence. For instance, organizations could review access logs from their web servers and look for connections coming from particular countries or IP addresses that could indicate reconnaissance activity. Or they could set up alerts when employees with privileged access to high-value systems attract unusual amounts of traffic, which could then be correlated with other indicators of threat activity to uncover signs of impending spear-phishing attacks.
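The web server example can be tried with a few lines of code. The following is a minimal sketch, assuming access logs in the Common Log Format. The watch list of networks, the threshold of "not found" responses, and the sample log lines are hypothetical, and country lookups (which would need a separate GeoIP data source) are left out.

```python
import ipaddress
import re
from collections import Counter

# Client address, request method, path, and status from a Common Log Format line.
CLF = re.compile(r'^(\S+) \S+ \S+ \[[^\]]+\] "(\S+) (\S+)[^"]*" (\d{3}) \S+')


def recon_candidates(lines, watch_networks, min_not_found=3):
    """Return clients that are on a watch list or that probe for missing pages."""
    networks = [ipaddress.ip_network(net) for net in watch_networks]
    not_found = Counter()
    watched = set()
    for line in lines:
        match = CLF.match(line)
        if not match:
            continue  # skip lines that are not in the expected format
        client, _method, _path, status = match.groups()
        try:
            address = ipaddress.ip_address(client)
        except ValueError:
            continue  # a hostname was logged instead of an address
        if any(address in net for net in networks):
            watched.add(client)
        if status == "404":
            not_found[client] += 1
    scanners = {ip for ip, count in not_found.items() if count >= min_not_found}
    return sorted(watched | scanners)


# Hypothetical log lines using documentation-reserved addresses.
log_lines = [
    '198.51.100.23 - - [14/Mar/2014:10:00:01 +0000] "GET /admin.php HTTP/1.1" 404 512',
    '198.51.100.23 - - [14/Mar/2014:10:00:02 +0000] "GET /phpmyadmin/ HTTP/1.1" 404 512',
    '198.51.100.23 - - [14/Mar/2014:10:00:03 +0000] "GET /wp-login.php HTTP/1.1" 404 512',
    '203.0.113.9 - - [14/Mar/2014:10:05:00 +0000] "GET / HTTP/1.1" 200 2048',
    '192.0.2.50 - - [14/Mar/2014:10:06:00 +0000] "GET /old-page HTTP/1.1" 404 512',
    '192.0.2.50 - - [14/Mar/2014:10:06:05 +0000] "GET /index.html HTTP/1.1" 200 4096',
]

candidates = recon_candidates(log_lines, watch_networks=["203.0.113.0/24"])
print(candidates)
```

The output is a short list of addresses worth a closer look, which can then be correlated with other indicators rather than treated as proof of an attack.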

Many of the standards are a good fit for organizations with specific needs. If an organization wants to share incident data and be part of the analysis of a broad data set, then VERIS would be the best choice. If an organization wants to share indicator details in a completely public system, then OTX would be a reasonable choice. If an organization is using tools that support OpenIOC, then of course OpenIOC would be the best choice. If an organization is looking for a package of industry standards then the MILE package (IODEF, IODEF-SCI, RID) or the Mitre package (CybOX, STIX, TAXII) would be suitable. Both have the capability to represent a broad array of data and support sharing of that data.

As a recipe for success, it is important to start small, start simple, prototype, and evolve as the organization gains confidence and familiarity, growing the threat sources, communication, and collaboration so that there is valid data, analysis, and actionable results. Focus on and validate the process.

Next Steps

In future parts we will delve further into practical use cases with examples and implementations, review external feed sources (pros and cons), discuss triage, building context, validation of data, and performing analysis, and discuss the development of organizational threat profiles based on the risk management process.

In addition, we will be publishing the APT Anomaly Detection blog, which complements this work by using network flows to baseline a network and detect anomalous activity, so that there isn’t reliance on just one methodology. We will also kick off the Next Generation Security Operations series that seeks to tie all of the APT Series threads into a holistic architecture and defensive strategy (SoC V2).

Thanks for your interest!

Nige the Security Guy.
