Architecture Case Study – Part 1

June 28, 2013

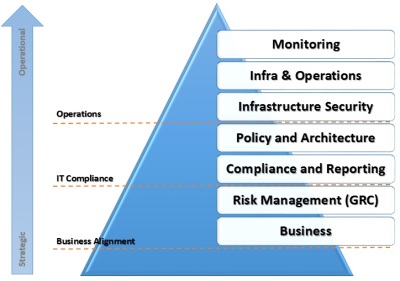

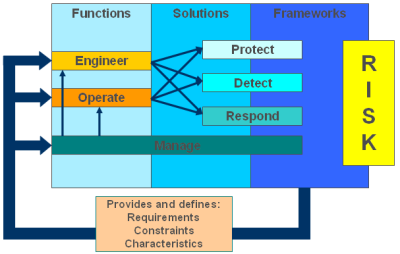

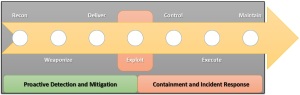

In the Security Architecture Series of blogs we have shared all of the steps involved, from requirements gathering, baseline, and product and solution selection through to realizing the architecture. This blog presents an Architecture Case Study that uses those principles and recommendations as a practical example. The illustration provides a simplified conceptual view of the program use case.

Part 1 (this blog) takes the reader from Architecture development through to the Technical Recommendation. Part 2 then takes the reader from Design to Deployment strategy, with Implementation and Migration.

Program Overview

The overall goal of the project was to standardize across the organization and all of its 20+ business units. The business units are primarily autonomous, with different types of technology and infrastructure at varying degrees of maturity and security. The status quo presented a series of risks to both the organization and each of the business units.

The benefits went beyond standardization: purchasing technology at a volume discount improved the total cost of ownership (TCO) and Return on Security Investment (ROSI), while also gaining increased visibility and support from the vendor(s). However, the primary goal and benefit was to establish and foster a spirit of collaboration, sharing, and cross-pollination, working together towards a common vision.

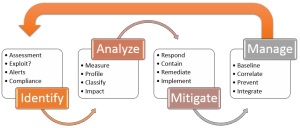

The overall high-level approach is as follows:

- Develop Architecture

- Requirements

  - Specify Functional Requirements (RFI)

  - Request Information from the vendor community (Distribute RFI)

  - Review RFI responses

  - Select vendors for product/solution evaluation

- Vendor/Product Selection

  - Conduct bake-off testing with business unit participation

  - Review evaluation scorecard results

  - Conduct pilot of highest ranked solution

  - Review pilot results

- Technical Recommendation

- Develop Design

- Develop Implementation Program

  - 4 Phases

  - Alpha Test

  - Beta Test

  - Deploy/Execute

  - 9 Step Program

Baseline Network Standard Architecture

I worked on the project as a consultant in the role of Program Technical Architect, as part of the overall Architecture Governance and Steering Committee. My role was to guide the direction and act as technical lead, and also to perform much of the detailed work of developing the actual deliverables through collaboration and interaction.

Network Security Working Group (WG)

The first step was to form a Network Security Working Group (WG) that included stakeholders from the various business units, contributing at two levels, as follows:

- Level 1 – A small representative sample of core members who were involved in the brainstorming sessions to represent their business unit and contribute input on both the architecture as well as unique requirements

- Level 2 – A stakeholder from every business unit who was involved in monthly or quarterly (as appropriate) review and approval of the emerging work product and progress to enable consensus and buy-in.

Collaboration was key to the success of the project. We wanted to involve stakeholders in every stage of the process and to ensure that their contributions were captured and recorded. Brainstorming sessions were used extensively at various locations, prepared in advance to seed and stimulate the discussion, with a facilitator to lead and scribes to record and document.

Architecture Draft Review

A series of review cycles was run with a progressively broader audience to ensure that the architecture aligned with both the current and future strategy and needs of the business units. The architecture document contains the following sections:

- Architectural Principles

- Network Models

- Physical Layer Design

- Supported Protocols

- Network Performance Architecture

- Network Security Architecture

  - Areas

  - Perimeters

  - Zones

  - Controls

  - Management

- Network Management Architecture

- Enabling Services

- Appendix

  - Profiling BU Network Traffic

  - Modeling Steps

  - Example of Modeling a BU Network

The finalization and ratification of the Baseline Network Standard Architecture was a major accomplishment for the organization: not only did it lay the groundwork for the success of this specific program, it also laid the framework for future projects across initiatives such as Wireless and Evolving Security.

Requirements Specification (RFI)

The RFI – Network Security Functional Requirements document was developed next by the Network Security Working Group. The team worked closely together to identify the functional requirements and assign a relative priority of High, Medium, or Low.

RFI Evaluation Criteria

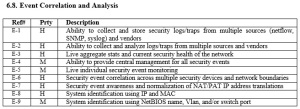

Once the RFI was completed and reviewed, the Network Security Working Group convened a meeting to establish the RFI response evaluation criteria and the scorecard to be used for the analysis of responses from bidding vendors. The functional requirements originally identified as High were examined further, and 19 of them were selected and rated as MUST by the group. A MUST designation meant that a security device would be eliminated from further consideration if it did not comply with that requirement.

All functional requirements were then scored according to priority: High, Medium, and Low requirements carried a maximum possible score of 10, 5, and 3 respectively.
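The scoring rules can be sketched in a few lines of Python. This is a minimal illustration, not the working group's actual scorecard. It reads the 10/5/3 maxima as applying to High/Medium/Low priorities, and it assumes three response values (`Yes`, `Partial`, `No`), half credit for `Partial`, and that anything short of `Yes` on a MUST requirement counts as non-compliance. The requirement IDs are hypothetical.

```python
from dataclasses import dataclass

# Maximum score per priority, reading the 10/5/3 maxima as High/Medium/Low.
MAX_SCORE = {"High": 10, "Medium": 5, "Low": 3}

# Assumed credit per response type; the half credit for "Partial" is illustrative.
CREDIT = {"Yes": 1.0, "Partial": 0.5, "No": 0.0}


@dataclass(frozen=True)
class Requirement:
    req_id: str         # hypothetical identifier, e.g. "FW-01"
    priority: str       # "High", "Medium", or "Low"
    must: bool = False  # True for the requirements rated MUST


def score_device(requirements, responses):
    """Return (eliminated, total_score) for one device's RFI response.

    `responses` maps req_id -> "Yes" | "Partial" | "No"; missing entries
    count as "No". A device is flagged as eliminated if any MUST
    requirement is not answered "Yes" (an assumption of this sketch).
    """
    eliminated = False
    total = 0.0
    for req in requirements:
        answer = responses.get(req.req_id, "No")
        if req.must and answer != "Yes":
            eliminated = True
        total += MAX_SCORE[req.priority] * CREDIT[answer]
    return eliminated, total
```

Keeping the elimination flag separate from the numeric total means a device that fails a MUST requirement can still be reported with its score, even though it drops out of further consideration.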

Evaluators and Decision-makers

To ensure that the RFI responses were analyzed in an independent and objective manner, the Network Security Working Group assigned an Evaluation Team, which was composed primarily of consultants. The Evaluation Team was solely responsible for conducting the RFI response analysis to select vendors and solutions, and also performed the network security equipment testing. However, the team did not participate in any decision-making and acted only as advisors. The Decision-maker Team was composed of members of the extended Network Security Working Group (Level 2).

RFI Evaluation Scorecard

The Evaluation Team developed an Evaluation Scorecard that took all response format files from bidding vendors and consolidated them into the Consolidated Vendor Response Form file. This consolidated file contained macros to process the entries from all of the bidding vendors and to create two worksheets, as follows:

- Product Stack Ranking – summary of scores based upon device category

- Vendor Stack Ranking – summary of scores by vendor
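The original consolidation was done with spreadsheet macros. As a rough Python equivalent, the sketch below builds both rankings from a flat list of scored responses. The `(vendor, category, score)` record layout and the alphabetical tie-break are assumptions made for illustration.

```python
from collections import defaultdict


def stack_rankings(rows):
    """Build the two rankings from consolidated score rows.

    `rows` is an iterable of (vendor, category, score) tuples, one per
    scored device response. Returns (product_ranking, vendor_ranking):
      product_ranking: {category: [(vendor, score), ...]}, best first
      vendor_ranking:  [(vendor, total_score), ...], best first
    """
    by_category = defaultdict(lambda: defaultdict(float))
    by_vendor = defaultdict(float)
    for vendor, category, score in rows:
        by_category[category][vendor] += score
        by_vendor[vendor] += score

    def ranked(scores):
        # Sort by score descending, then by name for a stable tie-break.
        return sorted(scores.items(), key=lambda item: (-item[1], item[0]))

    product_ranking = {cat: ranked(scores) for cat, scores in by_category.items()}
    vendor_ranking = ranked(by_vendor)
    return product_ranking, vendor_ranking
```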

RFI Evaluation Methodology

The Evaluation Team adopted an objective method of evaluation focused on the functional requirements as defined by the Network Security Working Group development team and communicated to the vendors in the Security RFI. This led to the following four-step procedure.

- Evaluate ‘Best of Breed’ responses to derive the top 3 vendors in each of the following four categories – Firewall, VPN, IPS, and Management.

- If possible, select the 4 vendors that appear most often in these rankings for inclusion in the network security equipment testing.

- Evaluate ‘Integrated Portfolio’ responses, if any, from all remaining vendors to derive the top 3 portfolio vendors.

- Select the best vendor from this ranking for inclusion in the network security equipment testing.
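The selection steps can be sketched in Python as follows. This is one possible reading, not the Evaluation Team's actual tooling: it takes "most populous" to mean the vendors that appear most often across the per-category top 3 lists, takes "remaining vendors" to mean those not already selected in the second step, and breaks ties alphabetically. The function name and data layout are hypothetical.

```python
from collections import Counter

CATEGORIES = ("Firewall", "VPN", "IPS", "Management")


def select_for_testing(best_of_breed, portfolio_scores, top_n=3, max_bob=4):
    """Pick vendors for equipment testing following the four steps.

    best_of_breed:    {category: {vendor: score}} for 'Best of Breed' responses
    portfolio_scores: {vendor: score} for 'Integrated Portfolio' responses
    Returns (best_of_breed_vendors, portfolio_vendor_or_None).
    """
    # Step 1: top `top_n` vendors in each category.
    appearances = Counter()
    for category in CATEGORIES:
        scores = best_of_breed.get(category, {})
        top = sorted(scores, key=lambda v: (-scores[v], v))[:top_n]
        appearances.update(top)

    # Step 2: the vendors that appear most often across those rankings.
    ordered = sorted(appearances, key=lambda v: (-appearances[v], v))
    bob_selected = ordered[:max_bob]

    # Step 3: top portfolio vendors among the vendors not yet selected.
    remaining = {v: s for v, s in portfolio_scores.items() if v not in bob_selected}
    top_portfolio = sorted(remaining, key=lambda v: (-remaining[v], v))[:top_n]

    # Step 4: the best portfolio vendor, if any responded.
    portfolio_pick = top_portfolio[0] if top_portfolio else None
    return bob_selected, portfolio_pick
```

With up to four best-of-breed vendors and one portfolio vendor, the procedure yields at most five candidates for equipment testing.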

For quality control, a Conformance Check was also conducted to ensure that every ‘Yes’ or ‘Partial’ response had an associated supporting Response Reference Section and/or comment to back up the vendor's statement.
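A check of this kind is simple to automate. The sketch below flags any ‘Yes’ or ‘Partial’ answer that has neither a reference nor a comment. The dictionary keys (`req_id`, `answer`, `reference`, `comment`) are hypothetical field names, not taken from the actual response form.

```python
def conformance_issues(responses):
    """Return the requirement IDs whose claims lack supporting evidence.

    `responses` is a list of dicts with the (hypothetical) keys
    "req_id", "answer", "reference", and "comment". Blank or missing
    reference/comment values are treated as absent.
    """
    issues = []
    for row in responses:
        claims_support = row.get("answer") in ("Yes", "Partial")
        reference = (row.get("reference") or "").strip()
        comment = (row.get("comment") or "").strip()
        if claims_support and not (reference or comment):
            issues.append(row["req_id"])
    return issues
```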

Vendor / Product Solution Selection

Of the fifteen network security equipment manufacturers that responded to the RFI, five vendors who best met the functional and operational requirements were invited to participate in the bake-off. Each vendor brought and installed equipment in the lab to allow members of the working group to conduct technical evaluations.

Bake-Off Testing Methodology

The primary goal of the bake-off testing was to further measure the fit of the proposed solutions, with a focus on holistic integration against the functional requirements that were documented in the Network Security RFI. It is interesting to note that most vendors acknowledged that this was the first time ever they had deployed and integrated their solutions holistically and operated them in a real-world scenario. Most vendors had only participated in one-off point solution evaluations.

The bake-off testing sought to identify, test, and select one or more manufacturers of network security solutions (Firewalls, IDS/IPS, VPN, and Management/Monitoring) to proactively meet the following goals:

- Secure core business unit network infrastructure devices

- Add network security components to protect and segregate critical assets

- Integrate security components to current network management systems (NMS)

- Develop a Network Security Management System (NSMS).

The Evaluation Team designed and deployed an inherently insecure Network Security Evaluation Lab that simulated a typical business unit network and provided the network areas and security zones that needed to be protected, along with sample assets. The testing considered potential threats and attacks from External sources (outside the border perimeter) as well as from Internal/Insider sources (business unit networks) directed at Data Center Zone and Management Zone(s) targets.

Test group scenarios were developed that made use of various typical threat and attack categories (e.g., signature based, anomaly based, DoS). The controlled attacks were initiated by a penetration tester from both external and internal sources. In addition, a traffic generation load/stress testing tool was utilized to exercise functionality and simulate normal traffic (client connections and sessions).

The above diagram provides a simplified illustration of the test groups and targets across the Functional Requirement categories, as follows:

- Detection

- Response

- Alert / Logging

- Correlation

- Reporting

- Management

These are the functional requirement categories that are documented in the Network Security RFI and the reference codes refer to the specific line item requirements.

The goal of the bake-off testing was to run multiple discrete sets of tests as ‘triggers’, each initiating a sequence of events that then flows through the functional requirement categories and elements in the diagram. The Evaluation Team asked each manufacturer to demonstrate whether and how the proposed security solution detected, responded, alerted, logged, correlated (where appropriate), and reported as a consequence of these sequences, and how any generated events were managed. The testing also evaluated the utility of the solution as well as factors such as integration, management, and monitoring. Decoys and scans were used to generate noise while stealthy attacks were employed.

The Evaluation Team was restricted to performing the testing and to providing an objective report to the Network Security WG attendees, in addition to an independent and objective report from the penetration tester and traffic generation tester. The attendees made use of an Evaluation Scorecard and each stakeholder contributed a score.

Evaluation Scorecards

The Evaluation Team developed a set of scorecards to be used by Network Security WG and business unit stakeholders attending the bake-off sessions. There were two scorecards used across two days, as follows:

- Objective Scorecard – Validate Compliance to Requirements

- Business Unit Scorecard – Validate Fit to Business Unit

For the Objective Scorecard, the Evaluation Team reviewed the tests and the vendor demo, together with Q&A, to determine whether the functional requirements were met by the implemented solution as the vendor had claimed in its RFI response. The team referenced the Vendor Summary and RFI Response sheet.

For the Business Unit Scorecard, the attendees individually assessed how well the solution satisfied the requirements and fit the needs of their business unit, and determined the total category score for each section.
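The post does not say how the individual Business Unit scores were combined. As one plausible approach, the sketch below averages each category's score across stakeholders. The averaging rule, the function name, and the data layout are all assumptions.

```python
from statistics import mean


def summarize_bu_scorecards(scorecards):
    """Average each category's score across stakeholders.

    `scorecards` maps stakeholder -> {category: total category score}.
    A category that a stakeholder left blank is simply not counted
    for that stakeholder.
    """
    collected = {}
    for scores in scorecards.values():
        for category, value in scores.items():
            collected.setdefault(category, []).append(value)
    return {category: mean(values) for category, values in collected.items()}
```

An average keeps business units on an equal footing regardless of how many categories each one scored. A weighted scheme would be an equally reasonable choice if some business units carried more of the deployment.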

Technical Recommendation

The technical evaluation consisted of more than 100 evaluation criteria and over 50 repeatable tests conducted on four network security components: Firewall, VPN, IDS/IPS, and Management/Monitoring. The RFI was designed to allow the option of selecting either the best suite of tools from a single manufacturer or the ‘best of breed’ (the best components from multiple vendors).

The Evaluation Team’s technical scores were tallied and submitted as a Technical Recommendation to complement the financial total cost of ownership analysis. The Technical Recommendation and Financial Analysis were provided as a summary of the findings and recommendations as an outcome of this evaluation process.

Implementing network security equipment on a business unit network is a large and challenging proposition. The group recommended Alpha and Beta deployments to validate technical elements and to better understand the complexity of integrating the proposed solution. In addition, the Alpha and Beta process helped to develop a deployment methodology that allows a deliberate approach to addressing important business unit and organization-wide security concerns.

Next Steps

Architecture Case Study – Part 2 will continue this series to take the reader from the Technical Recommendation on into Baseline Network Standard Design as well as the Deployment strategy with Implementation and Migration process.

Thanks for your interest!

Nige the Security Guy.